Introduction to k-means clustering:

Clustering is all about organizing items from a given collection into groups of similar items. These clusters could be thought of as sets of items similar to each other in some ways but dissimilar from the items belonging to other clusters.

Clustering a collection involves three things:

- An algorithm: the method used to group the items together.

- A notion of both similarity and dissimilarity: a rule that decides which items belong in an existing cluster and which should start a new one.

- A stopping condition: the point beyond which the items can no longer be regrouped, or at which the clusters are already quite dissimilar.
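To make these three ingredients concrete, here is a minimal k-means sketch in plain Python. It is not Mahout code: the function names, the toy 2-D points, the choice of Euclidean distance and the "stop when no item changes cluster" rule are all assumptions made for illustration.

```python
import math


def euclidean(a, b):
    """Dissimilarity measure: straight-line distance between two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def mean(points):
    """Component-wise mean of a non-empty list of points."""
    return tuple(sum(col) / len(points) for col in zip(*points))


def kmeans(points, initial_centroids, max_iter=20):
    """The algorithm: alternate between assigning points and moving centroids."""
    centroids = list(initial_centroids)
    k = len(centroids)
    assignment = None
    for _ in range(max_iter):
        # Assign every point to its nearest centroid
        new_assignment = [
            min(range(k), key=lambda c: euclidean(p, centroids[c]))
            for p in points
        ]
        # Stopping condition: no point changed cluster
        if new_assignment == assignment:
            break
        assignment = new_assignment
        # Move each centroid to the mean of its members
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:  # an empty cluster keeps its old centroid
                centroids[c] = mean(members)
    return centroids, assignment


# Toy data: two obvious groups (made-up values)
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (8.0, 8.0), (8.2, 7.9), (7.8, 8.1)]
centroids, assignment = kmeans(points, [points[0], points[3]])
print(assignment)
```

Here the first three points end up in one cluster and the last three in the other. Swapping `euclidean` for another measure changes the notion of similarity without touching the rest of the loop.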

Running k-means clustering in Apache Mahout:

The k-means implementation in Apache Mahout is mainly used for text processing. If you need to process numerical data, you have to write some utility functions that write the numerical data in sequence-vector format. For the standard “Reuters” example, the first few Mahout steps are actually data preprocessing.

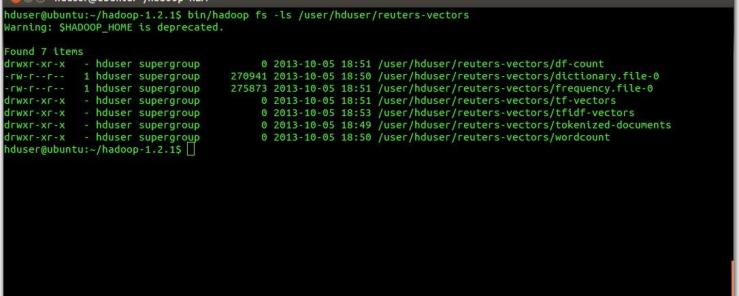

To be explicit, for the Reuters example, the original downloaded files are in SGML format, which is similar to XML. So we first need to parse these files into document-id and document-text pairs. For this preprocessing job, a much quicker way is to use the Reuters parser provided in the Lucene benchmark JAR file. After that we can convert the files into SequenceFiles. A SequenceFile is a file with a key-value structure: the key is the document id and the value is the document content. This step is done using ‘seqdirectory’, a built-in Mahout command that converts input files into SequenceFiles. We then need to convert the SequenceFiles into indexed term-frequency vectors using Mahout’s built-in ‘seq2sparse’ command. The generated vectors directory should contain the following items:

- reuters-vectors/df-count

- reuters-vectors/dictionary.file-0

- reuters-vectors/frequency.file-0

- reuters-vectors/tf-vectors

- reuters-vectors/tfidf-vectors

- reuters-vectors/tokenized-documents

- reuters-vectors/wordcount
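As a rough illustration of how these outputs relate to each other, the sketch below builds a dictionary, term frequencies, document frequencies and TF-IDF weights for three made-up documents. It uses the textbook weighting tf × log(N / df); the exact formula Mahout applies may differ, and the variable names and sample texts are assumptions for this example only.

```python
import math
from collections import Counter

# Made-up documents: key = document id, value = document text
docs = {
    "doc1": "oil prices rise as oil demand grows",
    "doc2": "wheat prices fall",
    "doc3": "oil exports fall",
}

# Dictionary: every distinct term gets an integer index
terms = sorted({t for text in docs.values() for t in text.split()})
dictionary = {term: i for i, term in enumerate(terms)}

# Term frequencies: how often each term occurs in each document
tf = {doc_id: Counter(text.split()) for doc_id, text in docs.items()}

# Document frequencies: in how many documents each term occurs
df = Counter(t for counts in tf.values() for t in counts)

# TF-IDF vectors, stored sparsely as {term index: weight}
n_docs = len(docs)
tfidf = {
    doc_id: {dictionary[t]: c * math.log(n_docs / df[t]) for t, c in counts.items()}
    for doc_id, counts in tf.items()
}

print(tfidf["doc1"][dictionary["oil"]])
```

The vectors hold term indices rather than words, which is why the dictionary is needed again later to make the clustering output readable.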

We will then use tfidf-vectors to run k-means. You can pass a ‘fake’ initial centers path: when the argument k is given, Mahout randomly selects k vectors to initialize the clustering. After the k-means algorithm has run successfully, we can use Mahout’s ‘clusterdump’ utility to export the data from HDFS to a text or CSV file.

The following are the actual commands used to perform this task.

Get the data from:

wget http://www.daviddlewis.com/resources/testcollections/reuters21578/reuters21578.tar.gz

Place it within the examples folder under the Mahout home directory:

mahout-0.7/examples/reuters

mkdir reuters

cd reuters

mkdir reuters-out

mv reuters21578.tar.gz reuters-out

cd reuters-out

tar -xzvf reuters21578.tar.gz

cd ..

Mahout Commands

#1 Run the org.apache.lucene.benchmark.utils.ExtractReuters class

${MAHOUT_HOME}/bin/mahout org.apache.lucene.benchmark.utils.ExtractReuters reuters-out reuters-text
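Conceptually, this step turns each SGML article into a document id and its text. The sketch below shows the idea with two regular expressions on a made-up sample written in the style of the Reuters files. It is not what ExtractReuters does internally; the `extract` function and the sample content are assumptions for illustration.

```python
import re

# Made-up sample imitating the structure of the Reuters SGML files
sample = """<REUTERS TOPICS="YES" NEWID="1">
<TEXT>
<TITLE>SAMPLE TITLE ONE</TITLE>
<BODY>Oil prices rose on Monday.</BODY>
</TEXT>
</REUTERS>
<REUTERS TOPICS="NO" NEWID="2">
<TEXT>
<TITLE>SAMPLE TITLE TWO</TITLE>
<BODY>Wheat exports fell last week.</BODY>
</TEXT>
</REUTERS>"""

ARTICLE = re.compile(r'<REUTERS[^>]*NEWID="(\d+)"[^>]*>(.*?)</REUTERS>', re.DOTALL)
BODY = re.compile(r"<BODY>(.*?)</BODY>", re.DOTALL)


def extract(sgml):
    """Return {document id: body text} for every article in the SGML string."""
    docs = {}
    for doc_id, inner in ARTICLE.findall(sgml):
        body = BODY.search(inner)
        # Articles without a body are kept with empty text
        docs[doc_id] = body.group(1).strip() if body else ""
    return docs


docs = extract(sample)
print(docs)
```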

#2 Copy the files to your HDFS

bin/hadoop fs -copyFromLocal /home/bigdata/mahout-distribution-0.7/examples/reuters-text hdfs://localhost:54310/user/bigdata/

#3 Generate sequence files

mahout seqdirectory -i hdfs://localhost:54310/user/bigdata/reuters-text -o hdfs://localhost:54310/user/bigdata/reuters-seqfiles -c UTF-8 -chunk 5

-chunk → chunk size of the output files, in megabytes

-c UTF-8 → character encoding of the input files
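The key-value idea behind this step can be sketched in a few lines of Python: walk a directory, decode each file with the given character set, and emit one (key, value) pair per file. Deriving the key from the file path, the function name `directory_to_pairs` and the file names are assumptions for this sketch. A real SequenceFile is a binary Hadoop format, not a Python list.

```python
import tempfile
from pathlib import Path


def directory_to_pairs(root, charset="UTF-8"):
    """Return one (key, value) pair per file: key = relative path, value = text."""
    root = Path(root)
    pairs = []
    for path in sorted(root.rglob("*")):
        if path.is_file():
            key = "/" + path.relative_to(root).as_posix()
            pairs.append((key, path.read_text(encoding=charset)))
    return pairs


# Demo on a temporary directory with two made-up documents
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "reut-1.txt").write_text("first document", encoding="UTF-8")
    (Path(tmp) / "reut-2.txt").write_text("second document", encoding="UTF-8")
    pairs = directory_to_pairs(tmp)

print(pairs)
```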

#4 Check the generated sequence file

mahout-0.7$ ./bin/mahout seqdumper -i /your-hdfs-path-to/reuters-seqfiles/chunk-0 | less

#5 Generate vector files from the sequence files

mahout seq2sparse -i hdfs://localhost:54310/user/bigdata/reuters-seqfiles -o hdfs://localhost:54310/user/bigdata/reuters-vectors -ow

-ow → overwrite

#6 List the output directory; it should contain these 7 items:

bin/hadoop fs -ls reuters-vectors

reuters-vectors/df-count

reuters-vectors/dictionary.file-0

reuters-vectors/frequency.file-0

reuters-vectors/tf-vectors

reuters-vectors/tfidf-vectors

reuters-vectors/tokenized-documents

reuters-vectors/wordcount

#7 Check the vector: reuters-vectors/tf-vectors/part-r-00000

mahout-0.7$ hadoop fs -ls reuters-vectors/tf-vectors

#8 Run canopy clustering to get initial centroids for k-means

mahout canopy -i hdfs://localhost:54310/user/bigdata/reuters-vectors/tf-vectors -o hdfs://localhost:54310/user/bigdata/reuters-canopy-centroids -dm org.apache.mahout.common.distance.CosineDistanceMeasure -t1 1500 -t2 2000

-dm → the distance measure to use while clustering (here, the cosine distance measure)

-t1, -t2 → the canopy distance thresholds T1 and T2. Canopy clustering expects T1 > T2, and cosine distance never exceeds 2, so the values shown here will most likely need tuning for your data.
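For intuition about what the distance measure and the two thresholds do, here is a small single-machine sketch of cosine distance and a canopy pass. It is a simplified illustration, not Mahout's implementation: the function names, the toy vectors and the thresholds 0.5 and 0.2 are assumptions, and the vectors are assumed to be non-zero.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity; 0 means same direction (assumes non-zero vectors)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def canopy_centers(points, t1, t2, distance=cosine_distance):
    """One pass over the points; returns the centroid of each canopy."""
    if t1 <= t2:
        raise ValueError("t1 must be larger than t2")
    canopies = []  # each canopy: {"center": first point, "members": [...]}
    for p in points:
        strongly_bound = False
        for canopy in canopies:
            d = distance(p, canopy["center"])
            if d < t1:  # loosely bound: the point joins this canopy
                canopy["members"].append(p)
            if d < t2:  # strongly bound: the point may not start a new canopy
                strongly_bound = True
        if not strongly_bound:
            canopies.append({"center": p, "members": [p]})
    # The mean of each canopy's members serves as an initial centroid
    return [
        tuple(sum(col) / len(c["members"]) for col in zip(*c["members"]))
        for c in canopies
    ]


# Toy vectors pointing in two clearly different directions (made-up values)
points = [(1.0, 0.1), (0.9, 0.2), (0.1, 1.0), (0.2, 0.9)]
centers = canopy_centers(points, t1=0.5, t2=0.2)
print(centers)
```

With these toy values two canopies form, one per direction. The number of canopies depends entirely on the thresholds, which is why they have to match the scale of the chosen distance measure.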

#9 Run the k-means clustering algorithm

mahout kmeans -i hdfs://localhost:54310/user/bigdata/reuters-vectors/tfidf-vectors -c hdfs://localhost:54310/user/bigdata/reuters-canopy-centroids -o hdfs://localhost:54310/user/bigdata/reuters-kmeans-clusters -cd 0.1 -ow -x 20 -k 10

-i → input

-o → output

-c → path to the initial centroids for k-means (if -k is also given, Mahout randomly samples k input vectors as the initial centroids and writes them to this path)

-cd → convergence delta: iteration stops once no centroid moves farther than this value

-ow → overwrite

-x → maximum number of k-means iterations

-k → number of clusters
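The interplay between the convergence delta and the iteration cap can be sketched as follows. This is an illustration of the stopping logic only, not Mahout's code: `converged`, `run` and the toy `step` function (which simply moves each centroid halfway towards a fixed target) are hypothetical names invented for this example, and `math.dist` requires Python 3.8 or later.

```python
import math


def converged(old_centroids, new_centroids, convergence_delta):
    """True when no centroid moved farther than convergence_delta."""
    return all(
        math.dist(old, new) <= convergence_delta
        for old, new in zip(old_centroids, new_centroids)
    )


def run(step, centroids, convergence_delta=0.1, max_iterations=20):
    """Apply `step` (one centroid update) until convergence or the iteration cap."""
    iteration = 0
    for iteration in range(1, max_iterations + 1):
        new_centroids = step(centroids)
        done = converged(centroids, new_centroids, convergence_delta)
        centroids = new_centroids
        if done:
            break
    return centroids, iteration


# Toy update standing in for a real k-means iteration:
# every centroid moves halfway towards a fixed target.
target = (0.0, 0.0)


def step(centroids):
    return [tuple((x + t) / 2 for x, t in zip(c, target)) for c in centroids]


final, iterations = run(step, [(8.0, 0.0)], convergence_delta=0.1, max_iterations=20)
capped, capped_iterations = run(step, [(8.0, 0.0)], convergence_delta=0.1, max_iterations=3)
print(iterations, capped_iterations)
```

In the first call the centroid's movement shrinks below 0.1 on the seventh update, so the loop ends early. In the second call the cap of three iterations is reached first.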

#10 Export the k-means output using the Cluster Dumper tool

mahout clusterdump -dt sequencefile -d hdfs://localhost:54310/user/bigdata/reuters-vectors/dictionary.file-* -i hdfs://localhost:54310/user/bigdata/reuters-kmeans-clusters/clusters-8-final -o clusters.txt -b 15

-dt → dictionary type

-d → path to the dictionary

-b → number of characters of each cluster's formatted string to print
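The dictionary is what lets the dump show words instead of numeric term indices. The sketch below shows that lookup on made-up data: it inverts a term-to-index dictionary and lists the heaviest terms of one centroid. The names `top_terms`, `dictionary` and `centroid`, and all values, are assumptions for illustration and do not reflect clusterdump's actual output format.

```python
# Made-up dictionary (term -> index) and sparse centroid (index -> weight)
dictionary = {"oil": 0, "prices": 1, "wheat": 2, "exports": 3}
centroid = {0: 0.81, 1: 0.12, 3: 0.55}


def top_terms(centroid, dictionary, n=10):
    """Return the n highest-weighted (term, weight) pairs of a centroid."""
    index_to_term = {index: term for term, index in dictionary.items()}
    ranked = sorted(centroid.items(), key=lambda item: item[1], reverse=True)
    return [(index_to_term[index], weight) for index, weight in ranked[:n]]


print(top_terms(centroid, dictionary, n=2))
```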

The output would look like this (note: this screenshot was taken from a real-life document clustering job on big data, not from the Reuters example):

Hello,

Thanks for this clear explanation. I just have one question, if you do not mind:

After k-means completes, how do I create the clusters with the original data (words, for example, not numbers)? Which command is responsible for that: seqdumper or clusterdump?

Thanks.

It's the clusterdump utility.